Robots already stand in for humans in some of the dullest and most dangerous jobs there are, handling everything from painting cars to drilling rocks on Mars. And if you listen to the hype about the potential of drones and autonomous vehicles, it's just a matter of time before robots do more. These future autonomous handymen and handywomen will deliver packages, take us to the airport, or handle less romantic tasks like shuffling freight containers and helping bedridden patients. The power of cloud software could make robots smarter and less expensive.

The future of robotics lies in that nebulous cloud.

There's just one problem: robots are dumb.

Despite all of the science fiction over the past half-century that has foretold the coming of intelligent, autonomous mechanical beings that attain consciousness—Neill Blomkamp's Chappie being the latest—robots generally remain limited to the most basic of programmed tasks. Even the most advanced and deadliest of unmanned aerial vehicles depend heavily on their network tethers back to human beings. Otherwise, they're nothing more than glorified model aircraft on autopilot.

For robots to do tasks that are relatively simple for humans—like driving a car, fetching a tool from a toolbox, passing something to a human co-worker, or repairing a broken object—they need to be a lot more intelligent. But putting the computing power required for that intelligence onboard a robot, absent Isaac Asimov's positronic brain, would make robots prohibitively expensive, bulky, and power-hungry. (Exhibit A: Northrop Grumman's X-47B and the UCLASS robot fighter/bomber that will follow it).

But smart, mass-market robotics isn't an impossible goal. In fact, the building blocks for such a change may exist today: they're the same technologies that have driven the "app" economy, software as a service, and the Internet startup economy. Specifically, researchers have increasingly looked to the power of cloud computing and high-speed networking in recent years to bring more cognitive capabilities to robots. Cloud technologies that were pioneered to help human beings process information appear to be the key for making robots act more intelligently, too.

The basics of the new botnet

Pepper, a humanoid robot developed by SoftBank Mobile and Aldebaran Robotics.

"Up to this point, the performance of a mobile robot is largely limited by the amount of memory or computation that it has onboard," Dr Chris Jones, Director for Research Advancement at iRobot, told Ars. "And if you're trying to hit price points that make sense, that computation and memory can be fairly small. So by connecting to the cloud, you end up with a lot more resources at your disposal."

Jones cites an array of possible benefits, from more memory and computation in the cloud to the broader advantages of constant connectivity. In theory, a robot that's always networked would let developers offload the computational burden of advanced perception approaches or navigation tactics.

We're already seeing some companies offloading an autonomous "brain" to the cloud. And it may not be too long before the same sorts of services used to build mobile digital assistants like Siri, Google's Voice Search, and Cortana are helping physical robots understand the world around them. The result could be a sort of "hive mind," where relatively inexpensive machines with some autonomous systems share a common set of cloud services that act as a group consciousness. Such a setup would allow a group of machines to constantly improve, adjusting operations as more experience is added to the collective memory. Theoretically, bots like this could not only interact with more complex environments, but they could engage people around them in a way that resembles a co-worker more than a calculator.

That's still a ways off, however. So far, industrial robots have in many ways followed a similar course to that of the PC. They've gone from standalone machines to networked devices, first over a proprietary protocol and eventually using open standards. In the 1980s, General Motors developed the first standard protocol for networked robots, called the Manufacturing Automation Protocol (MAP), based on a token bus architecture. MAP's networking would become the IEEE's 802.4 standard. MAP, and later other protocols that used Ethernet networking, allowed robots from different manufacturers to communicate and synchronize operations in real time.

"Networked robots can use a network infrastructure such as a wireless network or the Internet to talk to each other," said Markus Waibel, co-founder of the autonomous drone manufacturer Verity Studios AG and the non-profit ROBOTS Association. "In Cloud Robotics, robots can not only talk to each other but also to the cloud." And depending on how it's implemented, robots could use both shared cloud resources and a "personal" cloud, Waibel explained—the equivalent of a robot's Google Drive or iCloud.

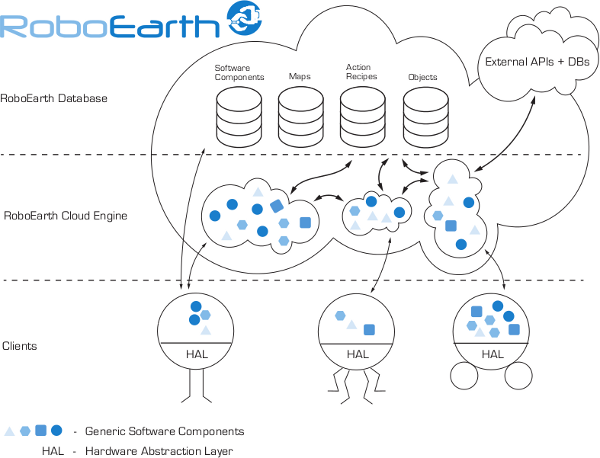

Waibel earned a PhD in robotics from the Swiss Federal Institute of Technology, and he was also part of the four-year RoboEarth project, a European Community-funded program that created an open-source platform for cloud robotics. That effort sought to address two ways to use the cloud to improve robotics: first by showing how conditions and learning experienced by one robot can be shared with other robots, then by demonstrating how the cloud "can allow robots to autonomously carry out useful tasks that were not explicitly planned for at design time," according to Waibel. By using a community of robots sharing a common, cloud-based software system, RoboEarth demonstrated that cloud software could dynamically direct robots to carry out tasks that weren't hard-coded into the robots themselves. Some of these were tasks that no single bot could have done without human coordination.

The first hurdle—voice

That first RoboEarth goal is particularly important. Just as "Internet of Things" applications allow cloud applications to add functionality to electronic devices and to gather sensor and other data from them, cloud robotics applications can enhance capabilities of robots by processing streams of sensor data.

One of the most desired future capabilities is robots that can perceive the world around them. While something like Google's driverless car has the luxury of carrying large quantities of sensor processing hardware aboard, robots traveling around a refinery, a hospital, or a factory to fetch a specific tool or instrument for a task don't. And in some ways, the perception tasks they face are more complex.

"Forty percent of the human brain is used for perception, and perception is one of the most difficult tasks for an autonomous robot," Gill Pratt, Program Manager of DARPA’s Defense Sciences Office, recently told Robohub. "It’s very difficult to fit a computer with the size, weight, and power that you need to achieve really good perception onto a robot. The computer just gets too large, it consumes too much power, and it weighs too much. However, if you have access to cloud resources with lots of data and lots of computing cycles, they can help move that burden off of the physical robot."

One of the first and most obvious cloud-based tools to inch robots closer to perception is voice recognition. This particular challenge has a seemingly easier solution. The same cloud application programming interfaces used by smartphone applications, gaming platforms, and even "smart" televisions to understand voice commands can be incorporated into robotic systems connected to the Internet. "It doesn't make sense to develop our own voice recognition when there are folks like Nuance that have very robust speech recognition engines that have a cloud API," said Jones. "That's an example of the type of existing cloud service that we could reach out and grab."

From there, things get trickier. It's not only important to recognize what was said, it's just as vital to understand what someone means. Cognitive systems, such as IBM's Watson, could help here. With such technology behind them, robots could understand what's being asked of them based on the context of the request. Alexa Swainson-Barreveld, IBM's Vice President for Watson products and solutions, told Ars that IBM has been partnering with SoftBank for that precise sort of cognitive capability for robots that act as human assistants (particularly in geriatric care).

"We're exploring opportunities where robots have cognitive capabilities to support people who are aging," Swainson-Barreveld explained. "The piece we've been working on in our labs is tightening the interaction with people—understanding human speech and interacting directly back with you, being able to respond back in a way that's appropriate. We're also starting to think about cognitive abilities in robots more broadly—how robots sense their location, where a sound is coming from, and things like that." These pieces, she said, are "the difference between working with an object that is frankly inanimate and working with a machine that is a partner."

A robot using LIDAR to map its surroundings. Connected to the cloud, this map could be shared with other robots.

Another basic part of perception is navigation—understanding where you are in the world and how to get from there to where you need to go. Some of the fundamental elements of navigation, such as detecting things that are in the way and avoiding them, currently require some hard-wired capability on the robot. That may not change.

"I don't think we'll be able to put object detection and avoidance in the cloud," said iRobot's Jones. "Taking existing capability and throwing it in the cloud isn't something that's going to be effective."

A loss of wireless network connectivity or a lag in processing would be a problem, he explained. But cloud capabilities can still improve navigation. "When a robot is moving forward, if a person jumps in front of that robot, it has to be able to stop. However, there could be hybrid approaches that would work, where there's a baseline ability on the robot so it always has some fundamental navigation (it may not be the smartest or best, but it's always on the robot), and when possible you can use the cloud to enhance that."

Jones cited situations where object recognition plays into navigation and avoidance. "If a robot is navigating down a long hallway and there's a box at the end of the hallway, that's going to take some time before it has to respond to it. Then it can use cloud resources for object recognition—determining whether it's a cardboard box or a person can influence navigation strategy. If it's a person, it's more appropriate to slow down and be softer in navigation. You don't want to miss a person by a centimeter, so you could instruct the robot to move to the opposite side of the hallway and slow down as it approaches."
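The hybrid arrangement Jones describes can be sketched in a few lines of Python. This is a minimal illustration, not iRobot's design: the thresholds, the `Command` type, and the `cloud_classify` callback are hypothetical stand-ins for a real perception service. The point it shows is that the stop decision is made onboard without waiting on the network, while a cloud answer that arrives in time can only make the robot more careful.

```python
import concurrent.futures
from dataclasses import dataclass
from typing import Callable, Optional

# Hypothetical thresholds; real values depend on the robot's braking distance.
STOP_DISTANCE_M = 0.5
CRUISE_SPEED_MPS = 1.0
CAUTIOUS_SPEED_MPS = 0.3

_pool = concurrent.futures.ThreadPoolExecutor(max_workers=2)


@dataclass
class Command:
    speed_mps: float
    shift_to_far_side: bool  # hypothetical: move to the opposite side of the hallway


def with_timeout(fn: Callable[[], str], timeout_s: float) -> Callable[[], Optional[str]]:
    """Wrap a cloud call so that a slow or failed request yields None."""
    def call() -> Optional[str]:
        future = _pool.submit(fn)
        try:
            return future.result(timeout=timeout_s)
        except Exception:  # timeout, dropped connection, server error...
            return None
    return call


def onboard_baseline(obstacle_distance_m: float) -> Command:
    """Always-on local rule that never depends on the network."""
    if obstacle_distance_m <= STOP_DISTANCE_M:
        return Command(0.0, False)
    return Command(CRUISE_SPEED_MPS, False)


def hybrid_command(obstacle_distance_m: float,
                   cloud_classify: Callable[[], Optional[str]]) -> Command:
    base = onboard_baseline(obstacle_distance_m)
    if base.speed_mps == 0.0:
        return base  # emergency stop is decided locally, immediately
    label = cloud_classify()  # None means "no answer in time"
    if label == "person":
        return Command(CAUTIOUS_SPEED_MPS, True)
    return base  # a box, an unknown object, or no cloud: keep the baseline
```

For example, `hybrid_command(8.0, with_timeout(lambda: "person", 0.2))` yields a slow pass along the far side of the hallway, while a cloud call that times out or raises simply falls back to the baseline cruise command.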

Today, basic robot navigation is already being improved by the cloud. RoboEarth's Waibel said that using cloud resources to process video streams from robots had a huge effect on their capabilities. "In one experiment we did, we streamed video key frames from a couple of cheap robots in Zurich to a RoboEarth Cloud Engine instance running on one of Amazon's commercial servers in Ireland. And suddenly, by combining these very low-cost robots with an incredibly low-cost commercial cloud service, you have multiple robots performing simultaneous localization and mapping, merging their maps and optimizing them in real time."
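Waibel doesn't describe how the Cloud Engine merged those maps, so the following is only one textbook technique, sketched under a strong assumption: the robots' occupancy grids are already aligned in a shared coordinate frame (in real multi-robot SLAM, finding that alignment is the hard part). With each cell stored as the log-odds that it is occupied, independent evidence from several robots combines by simple addition. The names here are hypothetical.

```python
import math
from typing import Dict, Tuple

Cell = Tuple[int, int]    # (row, col) index in a shared grid frame
Grid = Dict[Cell, float]  # cell -> log-odds that the cell is occupied; missing = unknown


def prob_to_log_odds(p: float) -> float:
    return math.log(p / (1.0 - p))


def log_odds_to_prob(l: float) -> float:
    return 1.0 / (1.0 + math.exp(-l))


def merge_grids(*grids: Grid) -> Grid:
    """Fuse already-aligned grids by summing log-odds.

    Assumes a 0.5 prior (log-odds 0) and independent observations,
    so each robot's evidence simply adds.
    """
    merged: Grid = {}
    for grid in grids:
        for cell, log_odds in grid.items():
            merged[cell] = merged.get(cell, 0.0) + log_odds
    return merged
```

Two robots that each rate a cell as 70 percent likely to be occupied yield about 84 percent after merging, while contradictory readings cancel back to 50 percent. Practical systems usually clamp log-odds values so the map can still change when the world does.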

Big data = big brains

Voice and navigation are areas where robots can both collect and benefit from data. But for ultimate understanding, much of the heavy lifting can be done by the companies already collecting data for the cloud. For example, entity databases like those created for Microsoft Bing and Google Search could be tapped into to find a set of images for a requested item—like a water bottle—and then to build a set of possible matches for that item. Over time, that entity database could be updated with additional models based on individual robots' training (an operator showing it an object and defining it as a water bottle) and experience (sensor data that helps create better three-dimensional models for recognition). All that digital experience could then be extended to things like the best way to pick an object up, allowing a robot to "understand" where a bottle's center of gravity is, how rigid it is, and how much force is required to grasp and lift it.
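As an illustration of how such an entity record might accumulate knowledge from many robots, here is a minimal in-memory sketch. The class names, fields, and the choice to key grasps by gripper model are assumptions made for the example, not the schema of any real entity database or of RoboEarth.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple

# Hypothetical grasp description: contact point in the object's own frame, metres.
Grasp = Tuple[float, float, float]


@dataclass
class EntityRecord:
    name: str
    example_images: List[str] = field(default_factory=list)  # e.g. references from a search index
    weight_samples_kg: List[float] = field(default_factory=list)
    grasps: Dict[str, List[Grasp]] = field(default_factory=dict)  # gripper model -> grasps that worked

    def mean_weight_kg(self) -> Optional[float]:
        if not self.weight_samples_kg:
            return None
        return sum(self.weight_samples_kg) / len(self.weight_samples_kg)


class SharedEntityStore:
    """In-memory stand-in for a cloud-hosted entity database."""

    def __init__(self) -> None:
        self._records: Dict[str, EntityRecord] = {}

    def get(self, name: str) -> EntityRecord:
        if name not in self._records:
            self._records[name] = EntityRecord(name)
        return self._records[name]

    def add_training_example(self, name: str, image_ref: str) -> None:
        # An operator shows a robot an object and names it.
        self.get(name).example_images.append(image_ref)

    def report_experience(self, name: str, gripper: str, grasp: Grasp, measured_kg: float) -> None:
        # A robot that lifted the object shares what worked and what it weighed.
        record = self.get(name)
        record.weight_samples_kg.append(measured_kg)
        record.grasps.setdefault(gripper, []).append(grasp)

    def grasps_for(self, name: str, gripper: str) -> List[Grasp]:
        # Read-only lookup: does not create a record for unknown objects.
        record = self._records.get(name)
        return [] if record is None else list(record.grasps.get(gripper, []))
```

Any robot with the same gripper model can then ask for grasps that already worked on a water bottle instead of discovering them from scratch.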

This kind of collaboration is already happening. Early research indicates that sharing one robot's view of an object often improves how accurately other robots can detect it. "For example, object detection can be made much more robust using data from multiple cameras. We barely scratched the surface of what's possible," Waibel noted. One RoboEarth experiment used cloud analytics to compute the statistical correlations between objects in images from robots—"the typical distances between a knife, a plate, and a screwdriver, for example," said Waibel. "That gave a robot a list of likely candidates for object recognition, which greatly improved its object recognition rate."
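Waibel's example suggests a simple statistical prior. One way to build it, sketched here under stated assumptions rather than as RoboEarth's actual analytics, is to collect pairwise distances between labeled objects from many robots' scenes, summarize each pair by a mean and standard deviation, and then score candidate labels for an unknown object by how well its distance from an already-recognized anchor object fits a Gaussian with those statistics. The scene format and function names are hypothetical.

```python
import math
import statistics
from collections import defaultdict
from itertools import combinations
from typing import Dict, List, Tuple

Scene = Dict[str, Tuple[float, float]]  # label -> (x, y) position in metres
PairStats = Dict[Tuple[str, str], Tuple[float, float]]  # (label_a, label_b) -> (mean, std)


def pair_distance_stats(scenes: List[Scene]) -> PairStats:
    """Pool scenes from many robots and summarize distances per label pair."""
    samples: Dict[Tuple[str, str], List[float]] = defaultdict(list)
    for scene in scenes:
        for a, b in combinations(sorted(scene), 2):
            samples[(a, b)].append(math.dist(scene[a], scene[b]))
    return {pair: (statistics.mean(d), statistics.pstdev(d)) for pair, d in samples.items()}


def rank_candidates(anchor: str, distance_m: float, stats: PairStats,
                    min_std: float = 0.05) -> List[Tuple[str, float]]:
    """Score labels for an object seen distance_m away from a known anchor."""
    scores = []
    for (a, b), (mu, sigma) in stats.items():
        if anchor not in (a, b):
            continue
        other = b if a == anchor else a
        s = max(sigma, min_std)  # avoid zero variance from too few samples
        z = (distance_m - mu) / s
        scores.append((other, math.exp(-0.5 * z * z) / (s * math.sqrt(2 * math.pi))))
    return sorted(scores, key=lambda item: item[1], reverse=True)
```

The ranking narrows the recognizer's search: in scenes where knives sit near plates and screwdrivers sit meters away, an unidentified item about 20 cm from a plate scores "knife" far above "screwdriver".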

This type of learning is one of the most powerful parts of the cloud robotics concept. Typically, robots have to be "trained" individually, or at least in a model-specific way, with humans guiding them through motions in a training mode or through model-driven programming. But some "knowledge" needed by robots transcends specific robotic tasks. The problem is how to put that knowledge in a form that can easily be shared.

"The best way of achieving that depends on the type of information shared," Waibel explained. "Many things are quite easy to share: object properties such as an object's weight, barcode, 3D model, or ideal gripping positions for specific gripper-object pairs are a good example. Others, such as actions, are hard."

To make it easier, the RoboEarth team "developed what we call an 'action recipe'," Waibel said. "[It's] a way to encode a robot's action in a high-level language that could be used and understood by robots with different software and hardware. That involved creating robot-specific action primitives (a bit like 'drivers' for your computer's peripherals) and a way to reason about and check requirements to complete an action, among other challenges."
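RoboEarth's actual recipe language isn't shown here, so the sketch below only mirrors the two ideas Waibel names: a recipe written in terms of abstract actions that each robot maps onto its own primitives (the "drivers"), plus a requirement check before anything runs. The recipe, action names, and robots are all hypothetical.

```python
from typing import Callable, Dict, List, Tuple

# A recipe step: (abstract action name, keyword arguments for that action).
Step = Tuple[str, Dict[str, str]]
# A robot's primitives: abstract action name -> robot-specific implementation.
Primitives = Dict[str, Callable[..., str]]


def missing_primitives(recipe: List[Step], primitives: Primitives) -> List[str]:
    """Requirement check: which actions does this robot lack?"""
    return sorted({action for action, _ in recipe if action not in primitives})


def run_recipe(recipe: List[Step], primitives: Primitives) -> List[str]:
    missing = missing_primitives(recipe, primitives)
    if missing:
        raise RuntimeError(f"robot cannot run recipe; missing: {missing}")
    # Each primitive translates an abstract step into robot-specific behaviour.
    return [primitives[action](**args) for action, args in recipe]


# Hypothetical recipe shared through the cloud.
FETCH_WATER: List[Step] = [
    ("navigate_to", {"place": "kitchen"}),
    ("grasp", {"obj": "water bottle"}),
    ("navigate_to", {"place": "bedside"}),
    ("hand_over", {"obj": "water bottle"}),
]

# Two hypothetical robots with different hardware.
mobile_manipulator: Primitives = {
    "navigate_to": lambda place: f"base drives to {place}",
    "grasp": lambda obj: f"arm closes gripper on {obj}",
    "hand_over": lambda obj: f"arm extends {obj} toward person",
}
camera_drone: Primitives = {
    "navigate_to": lambda place: f"drone flies to {place}",
}
```

The same `FETCH_WATER` recipe runs unchanged on the mobile manipulator, while the requirement check tells the cloud up front that the drone lacks `grasp` and `hand_over`, so the task can be assigned elsewhere.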

A video summary of the RoboEarth final demonstrator project shows how robots can share information about their environment and access cloud data to find objects associated with a task.

Never asleep at the throttle or the watch

There are already examples of these complex, robotic systems that use cloud-based learning and big data analytics to improve performance—they just don't look much like robots. Consider General Electric's system for locomotives called Trip Optimizer. It's an onboard computer that takes over the throttle and brake of a locomotive to get the best possible fuel performance for a particular freight train's route and load. To make that decision, Trip Optimizer pulls route data from the railroad's digital map, including changes in elevation and local speed limits for rail traffic, among other information.

Recently, we got a look at Trip Optimizer in a locomotive simulator at GE's Global Research Center in Niskayuna. While the system takes input from the rail crew on the load's weight and distribution, it can also sense and correct for differences based on the train's response to throttle and brake, essentially learning the train's performance characteristics as it goes along. Post-trip data can then be pulled back into the cloud to further optimize future trips. According to a GE spokesperson, the system has saved railroads an average of 32,000 gallons of fuel per year per locomotive since it was introduced.
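GE's description stops at "learning the train's performance characteristics," so the following is only a textbook illustration of what such learning could look like, not Trip Optimizer's algorithm. It assumes level, straight track, lumps rolling and aerodynamic resistance into a single constant R, and fits Newton's second law, F = m·a + R, to logged pairs of tractive force and measured acceleration by ordinary least squares. The function names are hypothetical.

```python
from typing import List, Tuple


def fit_mass_and_resistance(samples: List[Tuple[float, float]]) -> Tuple[float, float]:
    """Least-squares fit of F = m * a + R.

    samples: (acceleration in m/s^2, net tractive force in N) pairs.
    Returns (effective mass m in kg, lumped resistance R in N).
    """
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_a = sum(a for a, _ in samples) / n
    mean_f = sum(f for _, f in samples) / n
    s_aa = sum((a - mean_a) ** 2 for a, _ in samples)
    if s_aa == 0:
        raise ValueError("accelerations must vary to separate mass from resistance")
    s_af = sum((a - mean_a) * (f - mean_f) for a, f in samples)
    mass = s_af / s_aa
    resistance = mean_f - mass * mean_a
    return mass, resistance


def weight_discrepancy(estimated_kg: float, crew_entered_kg: float) -> float:
    """Fractional difference between the learned mass and the crew's figure."""
    return (estimated_kg - crew_entered_kg) / crew_entered_kg
```

Comparing the fitted mass with the crew-entered figure gives a simple check on the manifest. A production controller would also have to model grade, curvature, and speed-dependent drag, and would likely update its estimate incrementally as the trip progresses.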

One of the multipurpose robots being used in experiments at GE Global Research in Schenectady, New York.

But the current connection between robots and analytics can go much deeper than just learning how to handle a particular stretch of track. When coupled with networked sensors in industrial systems, and with analytical data on the back-end, low-cost robots can be used to automate functions like facilities' security and inspection, keeping an unblinking eye out for potential trouble and alerting systems (and people) to take proper actions. Rapyuta Robotics—a firm started by one of Waibel's students on the RoboEarth project—is using the work done by RoboEarth as the basis for a system of low-cost multi-robot security systems. (GE is doing similar work with its Guardian platform, an industrial surveillance system of robots that ties in to its industrial operations environment.)

Eventually, robotic systems could be completely directed by a cloud system based on analytics and high-level human instruction. Initiatives like the Defense Advanced Research Projects Agency's Instant Foundry Adaptive through Bits (IFAB) project envision manufacturing plants that can retool themselves for new manufacturing and assembly tasks based almost entirely on designs pulled down from a design cloud.

Robotic systems could also handle optimizing the logistics for those factories of the future, using analytics and planning software to tell them what to put in what container and the most efficient way to do it. Swarms of robots could handle any number of dull or potentially dangerous tasks, coordinated by cloud-based software responding to sensors and human instruction. The only limits on what cloud robotics could do are network connectivity, network latency, and the one thing that the cloud hasn't been able to duplicate thus far: imagination. The cloud-connected robot army of the future may not ultimately take our jobs, but it will certainly change them.